Artificial intelligence has reached a tipping point. Everyone from your boss to your grandma is now using it. That’s true for technology vendors as well. Nearly every email security vendor now claims to offer “AI-powered” protection. But not all AI is created equal.

The AI Hype Problem

The cybersecurity industry has seen a surge of marketing-driven AI adoption. Unfortunately, many companies have hastily bolted generic cloud-based large language models onto their existing platforms and rebranded the result as innovative new AI. That leaves buyers with a challenge: distinguishing purpose-built security intelligence from repurposed general-purpose AI systems.

While generic generative models are great at producing content, they introduce unpredictability and inconsistency that don’t mesh well with real security needs. Purpose-built discriminative AI is designed specifically for threat classification in security contexts, delivering the reliable, deterministic results that email protection demands.

Understanding the AI Types

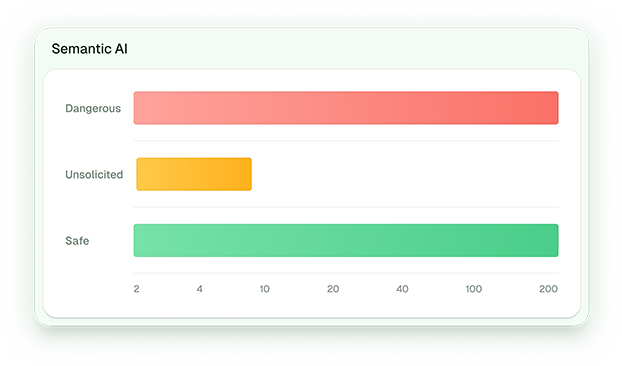

Generative AI (Large Language Models or LLMs) creates content and answers questions, but it doesn’t give fixed answers. It produces the most likely output based on probability, so the same prompt can yield different responses.

Discriminative AI classifies inputs into specific categories with consistent results: the same email always receives the same threat classification.

Semantic AI analyzes meaning, context, and intent rather than just keywords or structure, detecting threats based on logical inconsistencies in the communication context.

For email security, determinism isn’t just preferable—it’s an imperative. The same suspicious message must always produce the same threat assessment, enabling consistent protection policies, reliable audit trails, and predictable incident response. When security teams depend on AI-driven decisions, variability is vulnerability.
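To make the contrast concrete, here is a minimal Python sketch. It is not Libraesva’s implementation: the keyword weights, the bias, and the function names are all invented for illustration. Both functions share the same score. One applies a fixed threshold to it, the other draws the label from the probability, which is roughly what sampling from a generative model does.

```python
import math
import random

# Hypothetical fixed weights for a toy two-class scorer (illustration only).
WEIGHTS = {"urgent": 1.2, "invoice": 0.8, "password": 1.5, "meeting": -1.0}
BIAS = -3.0


def phishing_probability(text: str) -> float:
    """Logistic score computed from fixed weights; no randomness involved."""
    z = BIAS + sum(WEIGHTS.get(token, 0.0) for token in text.lower().split())
    return 1.0 / (1.0 + math.exp(-z))


def classify(text: str, threshold: float = 0.5) -> str:
    """Discriminative-style decision: a fixed threshold on a fixed score."""
    return "phishing" if phishing_probability(text) >= threshold else "clean"


def sample_label(text: str, rng: random.Random) -> str:
    """Sampling-style decision: draw the label according to the probability."""
    p = phishing_probability(text)
    return rng.choices(["phishing", "clean"], weights=[p, 1.0 - p])[0]


message = "urgent invoice password"

# 100 repeated classifications collapse to a single verdict.
deterministic = {classify(message) for _ in range(100)}

# 100 sampled verdicts (one per seed) disagree with each other.
sampled = {sample_label(message, random.Random(seed)) for seed in range(100)}

print(deterministic)
print(sampled)
```

The thresholded path returns one verdict no matter how often it runs, which is what makes policies and audit trails reproducible. The sampled path returns both verdicts for the very same message.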

SLM vs. LLM: The Technical Reality

Generic LLM vendors tout trillion-parameter models trained on everything from medieval history to random social media rants, which is a lot of useless noise for email security. Specialized SLMs (Small Language Models) like Libraesva’s take a different approach: 100 million parameters trained exclusively on real threat data. It’s the difference between a cybersecurity specialist who knows threats inside and out and a generalist who knows a little about everything but isn’t a security expert.
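A rough back-of-the-envelope calculation shows why parameter count matters for hardware. The sketch below counts memory for the weights only. It assumes 4 bytes per parameter (32-bit floats) for the small model and 2 bytes (16-bit floats) for the large one; those storage formats are assumptions for illustration, not published figures for any product.

```python
def weight_memory_gb(parameters: int, bytes_per_parameter: int) -> float:
    """Memory needed just to hold the model weights, in gigabytes (10^9 bytes)."""
    return parameters * bytes_per_parameter / 1e9


# 100 million parameters stored as 32-bit floats (assumed format).
slm_gb = weight_memory_gb(100_000_000, 4)

# One trillion parameters stored as 16-bit floats (assumed format).
llm_gb = weight_memory_gb(1_000_000_000_000, 2)

print(f"SLM weights: {slm_gb:.1f} GB")   # SLM weights: 0.4 GB
print(f"LLM weights: {llm_gb:.1f} GB")   # LLM weights: 2000.0 GB
```

Under these assumptions the small model’s weights fit comfortably in the memory of an ordinary server, while the large model’s weights alone need thousands of gigabytes spread across many accelerators.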

LLMs need expensive GPU setups and constant cloud connections, and they’re slow, which kills productivity when people are waiting for emails. SLMs run on the same CPU-based hardware you’re probably already running, stay completely on your premises, and analyze threats in under a second.

The training methodology shows that more isn’t always better. LLMs ingest massive datasets to learn broadly about everything. SLMs get laser-focused training on just 6,000 carefully selected, high-quality threat examples. The result is better security performance from focused, domain-specific learning rather than a “spray and pray” approach.

When threats evolve, SLMs adapt through rapid model updates. The base model provides semantic understanding across 100 languages, while a fine-tuning layer handles email threat classification. Updates require just hours for retraining and minutes for deployment, giving you agility that massive LLM retraining can’t match.
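The split between a base model and a fine-tuning layer can be sketched in a few lines of Python. This is a toy under stated assumptions, not the actual architecture: the tiny word-to-vector table stands in for a pretrained multilingual encoder, the four training examples are invented, and the names `embed`, `train_head`, and `predict` are hypothetical. Only the small classification head is trained; the base stays frozen, which is why an update can be quick.

```python
import math

# Hypothetical frozen "base model": a fixed word-to-vector table standing in
# for a pretrained encoder. Nothing below ever modifies it.
BASE_EMBEDDINGS = {
    "verify": (0.8, 0.2),
    "password": (0.9, 0.1),
    "lunch": (0.1, 0.9),
    "agenda": (0.2, 0.8),
}


def embed(text):
    """Mean-pool the vectors of known words; unknown words are ignored."""
    vectors = [BASE_EMBEDDINGS[w] for w in text.lower().split() if w in BASE_EMBEDDINGS]
    if not vectors:
        return (0.0, 0.0)
    return tuple(sum(dim) / len(vectors) for dim in zip(*vectors))


def train_head(examples, epochs=300, learning_rate=1.0):
    """Fit only the classification head (logistic regression, batch gradient descent)."""
    weights, bias = [0.0, 0.0], 0.0
    features = [(embed(text), label) for text, label in examples]
    n = len(features)
    for _ in range(epochs):
        grad_w, grad_b = [0.0, 0.0], 0.0
        for x, y in features:
            score = weights[0] * x[0] + weights[1] * x[1] + bias
            error = 1.0 / (1.0 + math.exp(-score)) - y
            grad_w[0] += error * x[0]
            grad_w[1] += error * x[1]
            grad_b += error
        weights = [w - learning_rate * g / n for w, g in zip(weights, grad_w)]
        bias -= learning_rate * grad_b / n
    return weights, bias


def predict(head, text):
    """Classify a message using the frozen base plus the trained head."""
    weights, bias = head
    x = embed(text)
    score = weights[0] * x[0] + weights[1] * x[1] + bias
    return "phishing" if score > 0 else "clean"


# Invented toy examples (1 = phishing, 0 = clean), not real threat data.
TRAINING = [
    ("verify password", 1),
    ("password verify now", 1),
    ("lunch agenda", 0),
    ("agenda", 0),
]

head = train_head(TRAINING)
print(predict(head, "please verify your password"))
print(predict(head, "team lunch agenda"))
```

Retraining for a new threat pattern means calling `train_head` again with updated examples. The head here has three numbers to learn, so the cost of an update is tiny compared with retraining the base.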

Privacy and Performance Benefits

Cloud LLM deployments expose sensitive email content to third-party services, creating compliance risks under GDPR and data residency requirements. Network latency and service dependencies introduce delays that disrupt email workflows. On-premises semantic AI eliminates these concerns: it offers complete data control, sub-second processing on standard computer hardware, and no external dependencies.

Local deployment reduces the attack surface by eliminating cloud API vulnerabilities and vendor dependencies. Integration with existing gateway infrastructure is straightforward: the model runs in parallel with current security stacks, including Libraesva’s Adaptive Trust Engine, sandboxing, and antivirus systems, without disrupting workflows.

Unlike standalone solutions, semantic AI works as a complementary layer alongside existing systems such as the Adaptive Trust Engine. It engages only when messages require deeper inspection, so processing power is spent where it matters most.
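Selective engagement of this kind can be pictured as a simple routing step. The sketch below is an assumption about how such a gate might look, not a description of the product: the `sender_trust` score, the thresholds, and the `route` function are hypothetical stand-ins for whatever signals the upstream layers actually provide.

```python
from dataclasses import dataclass


@dataclass
class Message:
    sender_trust: float  # hypothetical 0..1 score from an upstream trust layer
    body: str


def route(message: Message, low: float = 0.2, high: float = 0.9) -> str:
    """Pick a path for a message; the thresholds are illustrative only."""
    if message.sender_trust >= high:
        return "deliver"            # clearly trusted: skip deeper analysis
    if message.sender_trust <= low:
        return "quarantine"         # clearly untrusted: no need to look further
    return "semantic_analysis"      # ambiguous: worth the extra processing


inbox = [
    Message(0.95, "Minutes from Monday's meeting"),
    Message(0.05, "You won a prize, click here"),
    Message(0.60, "Please update the bank details for this invoice"),
]

routes = [route(m) for m in inbox]
deep_inspections = routes.count("semantic_analysis")

print(routes)            # ['deliver', 'quarantine', 'semantic_analysis']
print(deep_inspections)  # 1
```

In this toy inbox only one of three messages reaches the more expensive analysis step, which is the point of placing a cheap decision in front of it.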

The Bottom Line

While competitors tack generic cloud LLMs onto existing platforms and call it “email AI,” Libraesva’s purpose-built semantic AI takes a more deliberate approach to AI-powered email security. CPU-only processing, domain-specific accuracy, and a privacy-by-design architecture reflect security-first thinking rather than marketing hype.

Context-aware security moves beyond signature detection to understand communication intent and semantic anomalies.